Recently, I reported on the perils and promise of a project I have been working on with Dr. Frank Bonkowski. We created an automated film-analysis essay evaluation system to correct and formatively score essays written by his advanced English as a Second Language (ESL) learners at CEGEP de St-Laurent in Montreal, Canada. The idea was to have the Virtual Writing Tutor (VWT) process an essay and give formative feedback on grammatical errors, content and organization, vocabulary use, and scholarship. Frank tried the automated formative evaluation system with his students, and we have some preliminary results to share.


How will automated film-analysis essay evaluations help?

We expected the system would help in two ways.

First, we expected that the essay evaluation system would reduce the teacher’s workload by about 12.5 hours per week. Every time a teacher assigns an essay to his or her 120-150 students, spending ten minutes correcting each essay adds 20 to 25 hours of work to the teacher’s workload. Spread over two weeks, that is about 2 hours a day of extra work. However, by using the regularly scheduled computer lab hour to have students submit their essays to the VirtualWritingTutor.com online essay evaluation system, we expected that the teacher would be able to forgo much of that correction work.
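The workload estimate is simple arithmetic. The sketch below reproduces it, assuming ten minutes per essay and a two-week correction window as stated above; the helper name `correction_hours` is purely illustrative.

```python
def correction_hours(num_students: int, minutes_per_essay: float = 10.0) -> float:
    """Hours needed to correct one essay for every student (illustrative helper)."""
    return num_students * minutes_per_essay / 60


if __name__ == "__main__":
    for n in (120, 150):
        total = correction_hours(n)
        # Spread the total evenly over a two-week correction window.
        print(f"{n} students: {total:.1f} hours in total, {total / 2:.1f} hours per week")
```

At the top of the class-size range (150 students), this gives the 25-hour total and the 12.5-hours-per-week figure; a teacher with 120 students would face about 20 hours, or 10 hours per week.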

Second, we expected that students would be able to use the automatically generated feedback and scores on multiple drafts of their essays to improve the final result. This appears to be the case. Frank filmed semi-structured interviews with a handful of his students, asking them about their experience using the formative evaluation system. As we expected, students made multiple revisions of their essays, using the feedback and scores from the Virtual Writing Tutor to guide the changes they made to their vocabulary, cohesion, language accuracy, thesis statements and topic sentences.

The experiment

Frank and I discussed the essay format, indicators of essay quality, and how students would use the system. Frank prepared his students with a series of lessons on researching and writing a film-analysis essay. Meanwhile, I worked with my programmer to define thresholds and comments based on essay features that we can detect with the Virtual Writing Tutor.

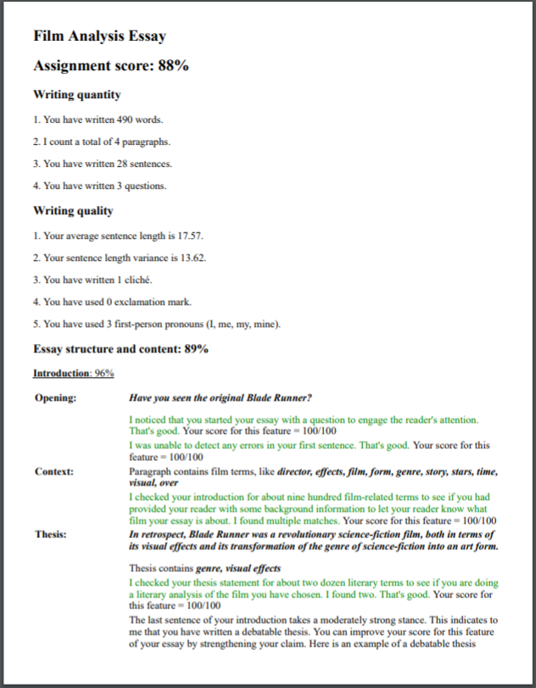

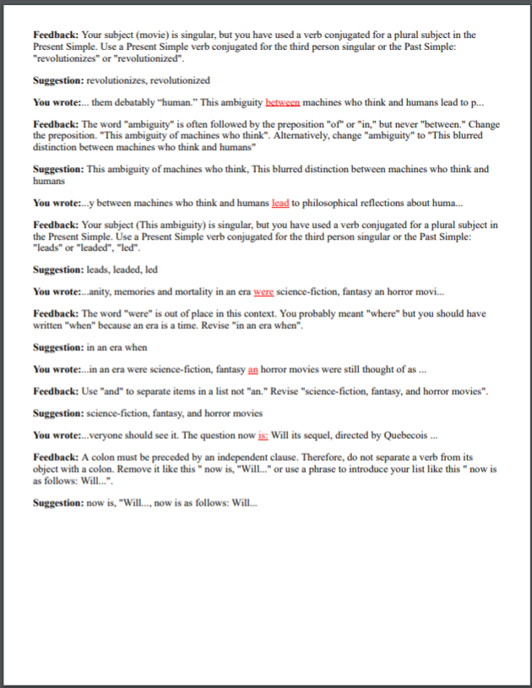

With their research in hand, the students wrote a draft of their essays in the multimedia lab, but instead of handing them in to the teacher and waiting two weeks for feedback, the students ran their essays through the Virtual Writing Tutor’s formative film-analysis essay evaluation system. In just 2 seconds, the system generated four pages of feedback, scores, and comments on how to improve their grades. Using formative evaluation from the VWT, students revised for a week and handed in their final draft for Frank to evaluate. The results are encouraging.

The student’s text

The first page of feedback

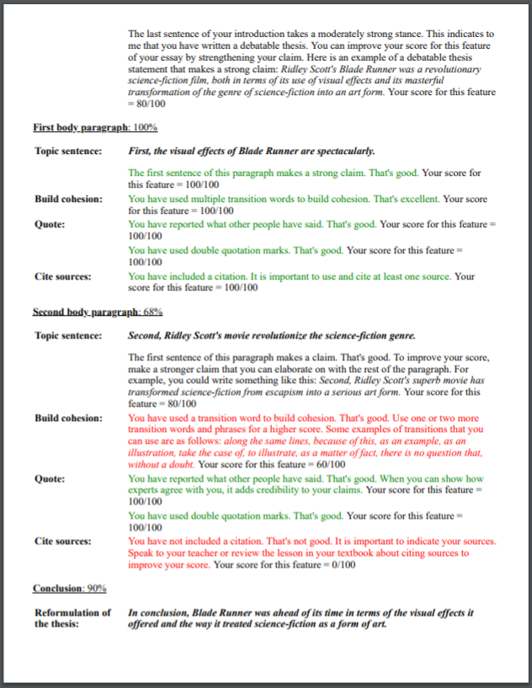

The second page of feedback

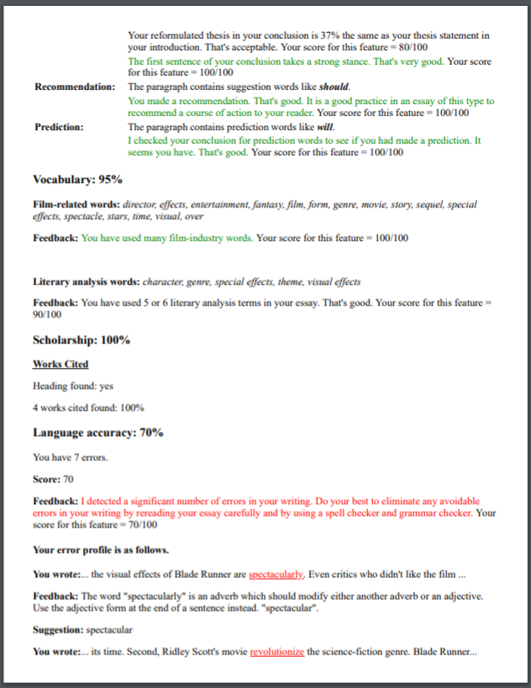

The third page of feedback

The fourth page of feedback

Bugs and catastrophic failures

Until the system was fully debugged, the axiom held true that human intelligence fails by degrees but artificial intelligence fails catastrophically. Some students encountered “Internal server error” messages when they submitted incomplete drafts. Others balked at mystifyingly low scores triggered by a misspelled “Works Cited” heading, two apostrophes in place of quotation marks, camel case or other unexpected characters in their in-text citations, a lack of paragraphing, and other formatting errors. Obviously, human teachers are still better than the VWT at handling the unexpected chaos in student writing.

We gleaned two insights from the failures. First, human coaching is essential for getting confused students to use the system successfully. (Incidentally, I have come to believe that the teacher’s prestige increases when students come to view him or her as an ally in a battle against the machine.) We expect that the second time they use it, they will understand the limitations of the machine’s AI and develop some persistence and patient problem-solving in the face of trouble.

Second, we saw the need for a way to anonymously capture texts that trigger system errors and bad feedback. To that end, we added a “Rate Feedback” button and popup that allow students to signal a positive or negative reaction (thumbs up or down) and leave comments to guide our debugging efforts.
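The post does not describe how these ratings are stored. As a hypothetical sketch only, a rating record could pair the submitted text with the thumbs-up/down signal and the optional comment while deliberately leaving out any student identifier, which is what would keep the capture anonymous. `FeedbackRating` and `to_json` are illustrative names, not the VWT’s actual schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class FeedbackRating:
    """Hypothetical record for one "Rate Feedback" submission.

    No name, student ID, or IP address is stored, so the record stays anonymous.
    """
    essay_text: str    # the text that produced the feedback being rated
    thumbs_up: bool    # True = positive reaction, False = negative
    comment: str = ""  # optional free-text comment to guide debugging
    submitted_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_json(rating: FeedbackRating) -> str:
    """Serialize a rating for storage or for a debugging queue."""
    return json.dumps(asdict(rating))


if __name__ == "__main__":
    rating = FeedbackRating(
        "Works Cted\nSmith, Jane. Film Studies. 2010.",
        thumbs_up=False,
        comment="My Works Cited list was not recognized.",
    )
    print(to_json(rating))
```

Filtering the stored records for `thumbs_up == False` would give a queue of texts worth debugging first.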

Semi-structured interviews

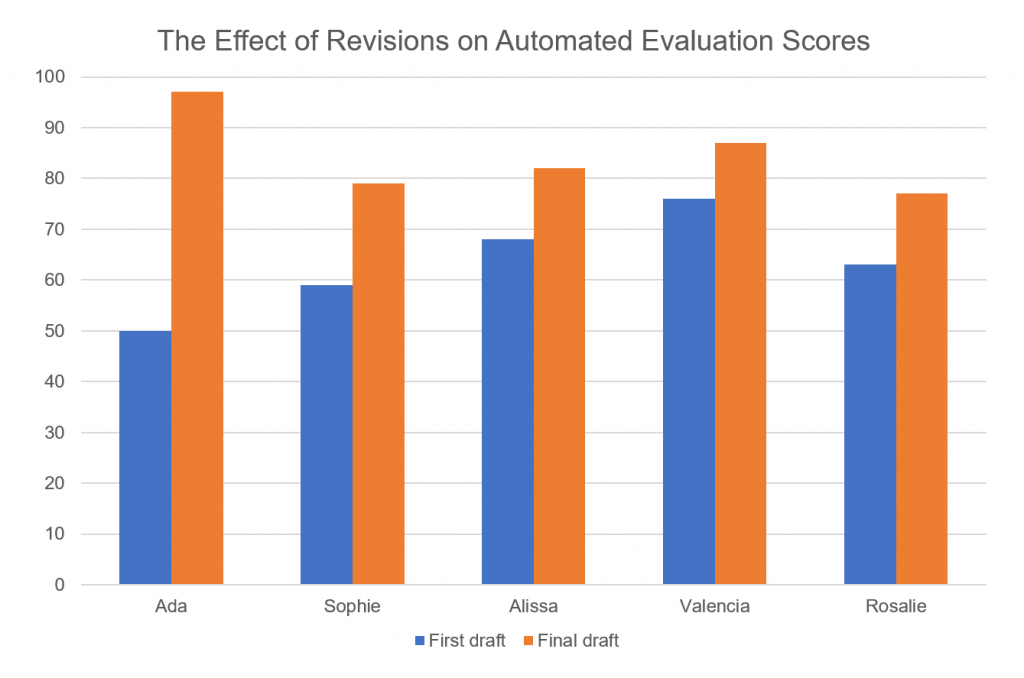

What follows are the video recordings and key findings from those semi-structured interviews. For each student interviewed, we give the increase between the first-draft and final-draft scores calculated by the Virtual Writing Tutor’s film-analysis essay evaluation system. On average, the five students that Frank interviewed improved their scores by 21.2 percentage points. Frank told me that he selected the students to interview based on their willingness to revise their drafts, so it is doubtful that all of his students improved by that much.
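The 21.2 average is the mean of the five absolute score gains, i.e. a change in percentage points rather than a relative increase. The sketch below uses only the first-draft and final-draft scores reported for each student in the sections that follow.

```python
# First-draft and final-draft scores (%) reported for the five interviewed students.
scores = {
    "Ada": (50, 97),
    "Sophie": (59, 79),
    "Alissa": (68, 82),
    "Valencia": (76, 87),
    "Rosalie": (63, 77),
}

# Gain = final score minus first-draft score (an absolute change in percentage points).
gains = {name: final - first for name, (first, final) in scores.items()}
mean_gain = sum(gains.values()) / len(gains)

print(gains)  # {'Ada': 47, 'Sophie': 20, 'Alissa': 14, 'Valencia': 11, 'Rosalie': 14}
print(f"Mean gain: {mean_gain:.1f} percentage points")  # Mean gain: 21.2 percentage points
```

Measured as a relative increase, the picture would look different: Ada’s jump from 50% to 97% is a 94% relative gain.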

Student reaction #1: Ada

Ada improved her score by 47 percentage points, from 50% to 97%, over seven revisions. She found the automatically generated comments detailed and useful. She rewrote her topic sentences based on sentiment analysis feedback to make stronger claims. She also added film and literary analysis vocabulary to deepen her analysis.

Student reaction #2: Sophie

Sophie improved her score by 20 percentage points, from 59% to 79%. She added literary analysis vocabulary drawn from the words suggested in the automatic comments. Sophie also made corrections based on the grammar checker’s feedback.

Student reaction #3: Alissa

Alissa improved her score by 14 percentage points, from 68% to 82%. She made her topic sentences more specific based on the sentiment analysis feedback, using the model topic sentences in the comments as examples. She rewrote her conclusion based on feedback on her word choice. She increased the number and specificity of transition words to build cohesion.

Student reaction #4: Valencia

Valencia revised her essay two or three times and improved her score by 11 percentage points, from 76% to 87%. She used the grammar feedback to improve her language accuracy (spelling). She increased the number and specificity of transition words to build cohesion. She made her thesis stronger using the sentiment analysis feedback and the model topic sentence provided in the comments.

Student reaction #5: Rosalie

Rosalie improved her score by 14 percentage points, from 63% to 77%. She added film and literary analysis vocabulary to increase her score. She noted that the system did not recognize her “Works Cited” section at first, so part of the increase in her score may have been due to our debugging efforts.

What have we learned about automated film-analysis essay evaluations?

Remember that this was an early pilot of the system. Although we are optimistic that automated formative essay evaluation will become a more common feature of ESL instruction, this was simply a proof-of-concept experiment. We see the results as encouraging evidence that there could be a place for automated essay scoring for formative purposes in our own courses. There is still a lot to learn.

Frank’s comments

Frank shared some of his own observations with me. He told me that his scores for the students’ essays did not exactly match the scores generated by the VWT. The system cannot tell the difference between meaningful reflection on a film and well-structured blather.

Feedback overload

Frank also told me that the volume of feedback (four 8.5 x 11 inch pages at a time) was overwhelming for some students. Some students told Frank that they just wanted the system to tell them what to do next. This remark prompted me to ask my programmer to put the comments into collapsed accordion sections showing just the score for each of the four dimensions of evaluation: content and structure, vocabulary, language accuracy, and scholarship. In some cases, we just want the headlines of the news and not all the details all at once, right?

In the first iteration of the evaluation system, all the comments were in regular black text. Since then, I have colour-coded the feedback: green comments indicate a score of 100%, black comments accompany scores of 60%-90%, and red comments flag scores below 60%. In this way, students get a better sense of what requires immediate attention.
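The colour rule is a simple threshold mapping. The sketch below is a minimal illustration, not the VWT’s code; `feedback_colour` is a hypothetical name, and because the rule as stated does not say how scores between 90% and 100% are coloured, the sketch assumes they are black like the 60%-90% band.

```python
def feedback_colour(score: float) -> str:
    """Return the comment colour for a dimension score between 0 and 100.

    Green marks a perfect score and red marks anything below 60%.
    Assumption: scores between 90% and 100% are not covered by the rule
    described in the post, so this sketch colours them black along with
    the 60%-90% band.
    """
    if score >= 100:
        return "green"
    if score >= 60:
        return "black"
    return "red"


if __name__ == "__main__":
    # Hypothetical scores for the four dimensions shown in the accordion headers.
    dimension_scores = {
        "Content and structure": 85,
        "Vocabulary": 100,
        "Language accuracy": 55,
        "Scholarship": 70,
    }
    for dimension, score in dimension_scores.items():
        print(f"{dimension}: {score}% ({feedback_colour(score)})")
```

Pairing each collapsed accordion header with its colour lets a student spot the red dimension without opening any of the detailed comments.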

Reliability

In terms of helping a teacher determine a summative score for the final drafts of the film-analysis essays, Frank told me that the system seemed most reliable at scoring the range and depth of vocabulary. Because that score is based on a straight count of literary analysis and film analysis words, it helped him make an evidence-based judgement about how much of the literary analysis toolkit he had taught in class his students were actually using. Sometimes, even a straight count of vocabulary items can indicate achievement. Essays containing literary terms suggest that their writers are able and willing to use those terms in an analysis of a film. That’s good.
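A “straight count” can be as simple as tallying every occurrence of a listed word. The sketch below illustrates that idea under stated assumptions: the two word lists are tiny hypothetical stand-ins (the VWT’s real lists are not given here), and `count_terms` is an illustrative name. It matches single words and hyphenated words only, so multi-word terms such as “point of view” would need separate handling.

```python
import re

# Hypothetical, deliberately tiny word lists; the VWT's real lists are not shown in the post.
LITERARY_TERMS = {"protagonist", "antagonist", "symbolism", "foreshadowing", "irony", "theme"}
FILM_TERMS = {"close-up", "montage", "lighting", "soundtrack", "framing"}

# Lower-case words, allowing internal hyphens such as "close-up".
WORD_RE = re.compile(r"[a-z]+(?:-[a-z]+)*")


def count_terms(text: str, terms: set) -> int:
    """Count every occurrence in text of a word from terms (a straight count)."""
    return sum(1 for token in WORD_RE.findall(text.lower()) if token in terms)


if __name__ == "__main__":
    essay = "The protagonist's fear is shown in a close-up, and the lighting adds irony."
    print("Literary terms:", count_terms(essay, LITERARY_TERMS))  # Literary terms: 2
    print("Film terms:", count_terms(essay, FILM_TERMS))          # Film terms: 2
```

Counting occurrences rather than distinct terms rewards repetition; counting distinct terms instead would measure range more directly.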

Reliably less reliable were the automatically generated language accuracy scores. The names of foreign actors and directors were flagged as errors even when correctly spelled. Whoops! I’m not too worried about this, though. The system should perform better in the future, because I have added a list of exceptions to the grammar checker’s internal spelling dictionary. However, the false alarms caused some consternation for students. They were disappointed to see low language accuracy scores with no way to improve them. Frustrating. I get it.
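An exception list works by checking a word against it before reporting a misspelling. The sketch below is hypothetical: the VWT’s dictionary and exception list are not shown in the post, and `NAME_EXCEPTIONS` and `flag_misspellings` are illustrative names with a few sample director surnames.

```python
# Hypothetical sketch: the real VWT dictionary and exception list are not shown in the post.
NAME_EXCEPTIONS = {"kurosawa", "almodóvar", "tarkovsky"}


def flag_misspellings(words, dictionary, exceptions=NAME_EXCEPTIONS):
    """Return the words that are in neither the dictionary nor the exception list."""
    flagged = []
    for word in words:
        key = word.lower()
        if key not in dictionary and key not in exceptions:
            flagged.append(word)
    return flagged


if __name__ == "__main__":
    dictionary = {"directed", "arrival", "the", "film"}
    print(flag_misspellings(["Kurosawa", "directed", "the", "film", "Arival"], dictionary))
    # ['Arival']
```

The catch is coverage: every actor or director a student might mention has to be on the list, so an open-ended essay topic will keep producing new false alarms until the list grows.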

Coverage

Frank noted that there were a number of glaring errors that the system missed. Obviously, the lack of error-detection coverage is a concern for me. I have been coding error detection rules for the past seven years, focusing mostly on high-beginner and low-intermediate learner errors. Now that Frank is using the system with his advanced students, I will have to write more rules to detect their most common errors. I will get there eventually.

First, do no harm

Frank certainly did not seem to think that the quality of his students’ writing declined. As far as we can tell, this automatic essay evaluation system is not doing anybody any harm.

Nick’s comments

On the whole, we are both pretty confident that the system is helping students with their writing. Students seem to be able to use the scores and the comments to make changes to their essays.

Gamification of revision

I suspect that there is a kind of gamification happening. The student might enjoy using a comment to get an extra percent or two. Each revision provides a little boost and creates a kind of ludic loop. You play, your points increase, and you want to keep playing. That’s good.

At junior college, students sometimes lack college readiness. They can have the attitude of “I did my homework and now it’s the teacher’s problem.” Jock Mackay, speaking at a conference in 2014, called this “efficiency syndrome.” Students often try to get their schoolwork done as quickly as possible. Think of it as optimal foraging: students want a score above zero while expending as little energy as possible.

Gamifying essay writing with automated formative evaluations seems to keep even underachieving students coming back to the task because they get immediate feedback that the job is not quite done yet. They keep plugging away at it, willing to make a micro effort in order to get the next bump in their score.

Scholarship checker

Upon reflection, I admit that the “Scholarship” calculation is still very rudimentary. Frank told me that some students’ reference lists were very sloppy but scored 100% anyway.

I should explain that the system simply checks for a “Works Cited” heading and counts the non-empty lines below it. We have made no attempt to check whether MLA style has been followed. At junior college, I am usually happy just to see that students are discovering and reporting other people’s ideas. They will have to up their game at university, but we can work on a more rigorous analysis using MLA or APA style requirements in the future.
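The check described above fits in a few lines. The sketch below is a minimal reconstruction from that description, not the VWT’s actual code; `count_works_cited_entries` is a hypothetical name, and the case-insensitive heading match is an assumption.

```python
from typing import Optional


def count_works_cited_entries(essay: str, heading: str = "Works Cited") -> Optional[int]:
    """Count non-empty lines after the first line that matches the heading.

    Returns None when no line matches, which mirrors the failure students hit
    when the heading was misspelled. Matching ignores case and surrounding
    spaces (an assumption; the post does not say how strict the match is).
    No check of MLA formatting is attempted.
    """
    lines = essay.splitlines()
    for i, line in enumerate(lines):
        if line.strip().lower() == heading.lower():
            return sum(1 for entry in lines[i + 1:] if entry.strip())
    return None


if __name__ == "__main__":
    essay = "Body paragraph.\n\nWorks Cited\nSmith, Jane. Film Studies. 2010.\n\nDoe, Alex. Cinema. 2015.\n"
    print(count_works_cited_entries(essay))                        # 2
    print(count_works_cited_entries("Body.\nWork Cited\nSmith.\n"))  # None
```

Because any non-empty line counts as an entry, a sloppy reference list scores just as well as a carefully formatted one, which is exactly the weakness Frank spotted.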

Revision strategies

The fact that the students Frank interviewed reported making multiple revisions is the biggest news. Why? Getting students to reread and reflect on specific features of their writing is itself progress.

Frank’s students said that the scores and comments were helpful in guiding their revisions. This suggests that, with accordion sections and colour-coded comments, the cognitive load of explicit, detailed feedback may remain manageable for at least some students. If we discover that only the most proficient students can use the comments productively and that weaker students find it all too bewildering, we will have to reflect on how teachers give feedback to that kind of student. When experienced teachers comment on the work of stressed-out students, they tend to focus on the next concrete step toward improvement, not the next 30 steps.

One strategy might be an “easy-win” summary box showing only the feedback with the biggest impact on the student’s score. As a teacher faced with an anxious student, I would not want to try to give feedback on all aspects of the essay at once. Instead, I might draw the student’s attention to glaring omissions. For example, I might say, “You have made a good start, but you forgot your works-cited section. Adding that will increase your score by 25%!” Big score boosts can alleviate that sense of helplessness and allow for further revisions later. It is something to think about.
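The “easy-win” box is only an idea at this stage. A hypothetical sketch, assuming each comment could be tagged with an estimate of how many points acting on it is worth, would rank the suggestions by that estimate and show only the top one. `Suggestion`, `score_gain` and `easy_wins` are illustrative names; the 25-point gain echoes the example above, and the other gains are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """One piece of feedback with its estimated effect on the score (hypothetical)."""
    message: str
    score_gain: float  # estimated percentage-point gain if the student acts on it


def easy_wins(suggestions, top_n=1):
    """Return the top_n suggestions with the largest estimated score gain."""
    return sorted(suggestions, key=lambda s: s.score_gain, reverse=True)[:top_n]


if __name__ == "__main__":
    feedback = [
        Suggestion("Add literary analysis terms such as 'symbolism'.", 5),
        Suggestion("You forgot your works-cited section.", 25),
        Suggestion("Use more specific transition words.", 3),
    ]
    for s in easy_wins(feedback):
        print(f"{s.message} (+{s.score_gain:g}%)")
    # You forgot your works-cited section. (+25%)
```

Keeping `top_n` at 1 mirrors the teacher’s instinct to name one concrete next step; the full list would still sit in the collapsed sections for students who want it.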